Introduction

Imagine a world where artificial intelligence (AI) merges seamlessly into our everyday lives. Think about a future where fascinating discoveries await and adventures branch out in more directions and environments than ever before. Picture AI-generated content so perfectly crafted that you cannot tell whether it was created by a human or a machine. Is this pure science fiction, or is it our actual situation? It raises all kinds of questions about what’s really true and what isn’t when you take in information from the sources around you. Notably, 71% of customers reportedly prefer human interaction over chatbots or automation.

This blog post dives into the surge of AI in content creation. We’ll break down the telltale signs of AI-generated text, explore the tech behind sniffing out undetectable AI, and tackle the big ethical questions this raises.

In March 2024, Google rolled out a major core update, alongside new spam policies, that it expected to reduce low-quality, unoriginal content in search results by 40%. We’ll also share strategies to keep the human touch in AI, highlight real-world case studies, and dish out recommendations for the future. Plus, we’ll stress the need for transparency and gaze into the crystal ball to see what’s next for AI content detection.

The Use of AI in Shaping Content

The rise of artificial intelligence (AI) in content creation is changing the digital world in big ways. With each step forward, AI’s ability to create detailed, human-like content grows stronger. From writing chatbot conversations to producing news articles and personalizing social media posts, AI is getting really good at sounding like a human. Detection has not kept pace: one reported figure puts the overall accuracy of AI content detectors at 67.8%.

This brings up an important question: Can we trust the content we read online? AI’s role in content creation can be both helpful and harmful. It can make content production easier and better, but it also risks making it harder to tell what’s real and what’s not.

The influence of artificial intelligence on content creation is a double-edged sword: on one side, it makes content production faster and more efficient; on the other, it puts at stake the confidence readers have in what they read online. This is a crucial juncture for everyone involved: writers, readers, ethicists, and developers. We have to strike a balance where AI still makes a contribution while human-created authenticity stays intact.

Given the continuing development of AI, everyone needs to be able to tell the difference between machine-generated and human-written content. We also have to address the associated ethical issues and guard against misinformation by writing honestly and transparently online.

The challenge, and the opportunity, is to use AI responsibly, so that it adds to the quality of digital content without undermining its credibility.

Identifying AI Generated Text

Spotting AI-generated text versus human-created content can be tricky, but it’s all about noticing certain telltale signs. These “hallmarks” are like red flags that can help you figure out if what you’re reading was crafted by a machine.

Repetitive Sentence Structures

First up, repetition. AI, no matter how advanced, often falls into the trap of repeating sentence structures. Imagine reading a piece where similar phrases and sentence patterns keep popping up. It’s like hearing the same tune over and over again. Humans mix things up naturally, but AI tends to recycle its constructions more frequently. If you catch this subtle redundancy, chances are, you’re looking at AI-generated text.
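To make the idea concrete, here is a minimal sketch of one way to put a number on repeated sentence openers. The function name `opener_repetition`, the two-word opener window, and the naive sentence splitting are all assumptions made for illustration; this is not how any particular detector works, and a high score on its own proves nothing about who wrote the text.

```python
import re
from collections import Counter


def opener_repetition(text: str, n_words: int = 2) -> float:
    """Share of sentences whose first n_words repeat an earlier sentence's opener."""
    # Naive split on ., ! or ? followed by whitespace (an assumption; real
    # tools use proper sentence segmentation).
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    if not sentences:
        return 0.0
    # Lowercase each sentence and keep its first n_words words as the "opener".
    openers = [
        " ".join(re.findall(r"[a-z']+", s.lower())[:n_words]) for s in sentences
    ]
    counts = Counter(openers)
    # Every occurrence of an opener beyond the first counts as a repeat.
    repeated = sum(c - 1 for c in counts.values())
    return repeated / len(sentences)


# Hypothetical sample: three of the four sentences open with "The tool".
sample = (
    "The tool is fast. The tool is cheap. "
    "The tool is simple. Writers vary their rhythm."
)
score = opener_repetition(sample)  # 2 repeats out of 4 sentences
```

A score near zero means the openers are varied; a score creeping toward one means the same construction keeps coming back. Treat it as one weak clue among several, since plenty of human writing (lists, instructions, speeches) repeats openers on purpose.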

Lack of Emotional Depth

Next, let’s talk emotions. AI can mimic emotions, but it lacks the depth and nuance humans bring to the table. Picture reading a heartfelt letter from a friend versus a scripted message. The AI’s version might seem flat or a bit off, missing those intricate layers of feeling and context that humans effortlessly weave into their writing. This emotional flatness can be a dead giveaway.

Logical Inconsistencies

Then there’s the logic. AI, despite its ability to handle tons of data, often stumbles when it comes to maintaining consistent logic. You might notice weird jumps in topics, contradictory statements, or parts that just don’t make sense together. Humans usually keep a steady thread of reasoning throughout their writing, but AI can lose the plot, leading to disjointed and confusing content.

Why This Matters

Understanding these signs is super important, especially for researchers and techies developing tools to detect AI-generated content. By focusing on these hallmarks, we can build better systems to tell AI text apart from human writing. This helps keep our digital world honest and authentic, even as AI continues to grow in its role.

So, the next time you’re reading something, keep an eye out for these clues. If the writing feels repetitive, emotionally flat, or logically inconsistent, you might just be dealing with an AI. Spread the word, stay informed, and help ensure the content we consume remains trustworthy and genuine.

The Technology Behind Detecting Undetectable AI

Undetectable AI is a fascinating solution that blends advanced machine learning with natural language processing (NLP). This tool goes deep into text to spot the subtle differences between human and AI writing, using powerful algorithms and vast datasets to detect the patterns that reveal a machine’s touch.

The Core of Detection

At the core are customized algorithms that analyze language and style. Think of NLP techniques like tokenization, sentiment analysis, and syntactic parsing as the tools that dissect text, looking at how words are used, the emotion behind them, and the structure of sentences. These algorithms check for coherence, complexity, and unique quirks, comparing them to what’s typical for human and AI writing.
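As a rough illustration of the tokenization step, the sketch below pulls a few simple style features out of a piece of text using only the Python standard library. The function name `style_features` and the choice of features are assumptions for this example; it does not cover sentiment analysis or syntactic parsing, and it is not a description of Undetectable AI’s actual pipeline.

```python
import re
from statistics import mean, pstdev


def style_features(text: str) -> dict:
    """Compute a few naive style features from raw text."""
    # Naive sentence split and regex tokenization (assumptions; production
    # systems would use a proper NLP library for both steps).
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    tokens = re.findall(r"[A-Za-z']+", text.lower())
    lengths = [len(re.findall(r"[A-Za-z']+", s)) for s in sentences]
    return {
        "token_count": len(tokens),
        # Vocabulary variety: distinct words divided by total words.
        "type_token_ratio": len(set(tokens)) / len(tokens) if tokens else 0.0,
        "mean_sentence_length": mean(lengths) if lengths else 0.0,
        # How much sentence length varies across the text.
        "sentence_length_stdev": pstdev(lengths) if lengths else 0.0,
    }


features = style_features("I came. I saw. I conquered the city at dawn.")
```

Features like these would normally be fed into a trained classifier rather than read by eye. On their own they only describe the text; they do not say who wrote it.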

Adversarial Machine Learning

A new and exciting part of this technology is adversarial machine learning. Here, AI systems are designed to challenge and test detection algorithms constantly. It’s like a game of cat and mouse, where detection systems are exposed to new and evolving AI-generated content, helping researchers refine and improve their models to keep up with AI’s advancements.

Semantic Analysis

Another key piece is semantic analysis. Older methods focused on surface features of the text, but modern systems dig deeper into meaning. They look at context, idioms, and the subtle nuances that make human writing unique. By understanding these deeper elements, detection tools can better distinguish between genuinely human content and AI-generated text.

A Multi-Disciplinary Effort

This work isn’t done in isolation. It requires collaboration from experts in computer science, linguistics, psychology, and data analytics. As AI continues to evolve, so too must the technologies we use to ensure our digital content remains authentic and trustworthy.

This blend of cutting-edge tech and human expertise is crucial for maintaining the integrity of our digital world. Stay informed about these advances, support efforts for transparency, and help promote trust in the content we encounter every day.

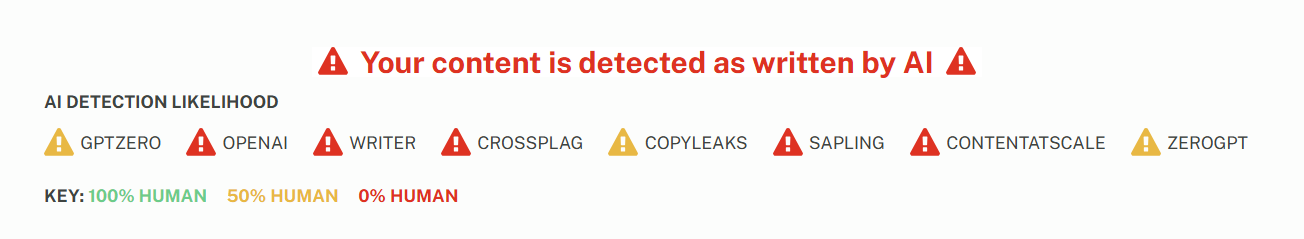

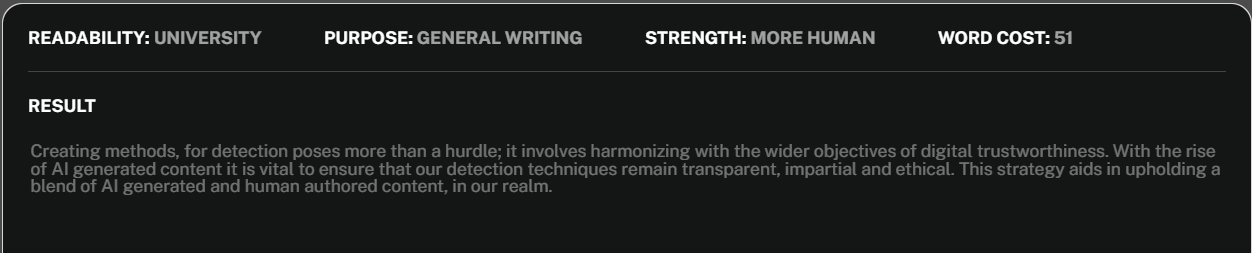

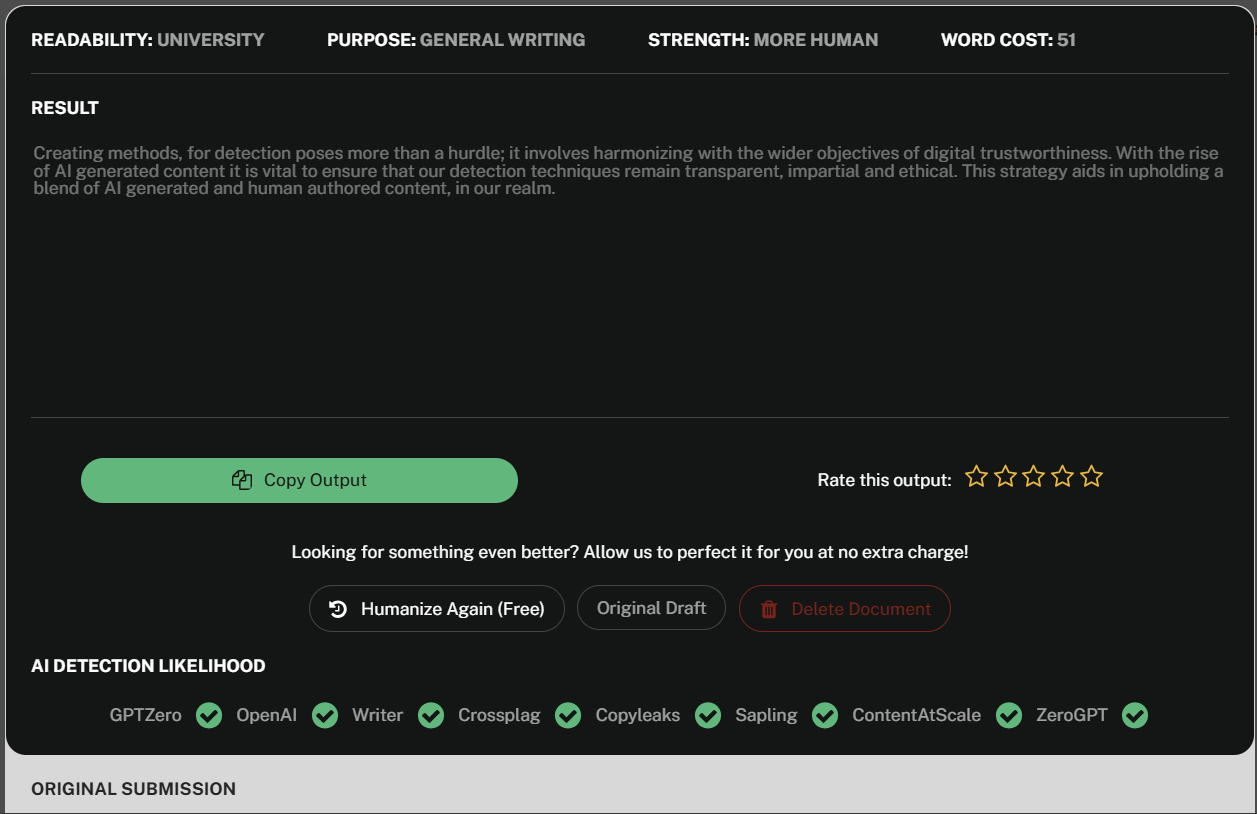

Undetectable AI: The Solution that Detects and Humanizes AI

As AI-generated content becomes more sophisticated, the challenge of distinguishing between human and AI-created text intensifies. Enter Undetectable AI, a groundbreaking solution designed to humanize AI-generated content and make it virtually undetectable by standard detection tools.

Key Features

1. AI Stealth Writer: The AI Stealth Writer is the centerpiece of Undetectable AI, offering the ability to rewrite AI-generated text to sound more human-like. This tool leverages deep learning and natural language processing to seamlessly integrate AI-generated text with human-created content. It ensures that the output not only meets grammatical standards but also captures the nuances of human expression, making detection by algorithms nearly impossible.

2. Human Typer Tool: This feature simulates human typing patterns, further reducing the chances of detection. By mimicking the natural flow and rhythm of human typing, it adds another layer of authenticity to AI-generated content.

3. Advanced Linguistic Models: Undetectable AI uses advanced algorithms and linguistic models to refine AI-generated text. These models continuously learn and adapt, improving their ability to produce content that closely mirrors human writing. This includes understanding and incorporating cultural context, idiomatic expressions, and subtle language nuances.

Benefits

1. Enhanced Authenticity: By humanizing AI-generated text, Undetectable AI ensures that the content is more relatable and engaging for readers. This authenticity is crucial for maintaining trust and credibility in digital communications.

2. Avoid Detection: With tools designed to bypass even the most advanced AI detectors, Undetectable AI allows users to use AI-generated content without the risk of it being flagged or penalized. This is particularly valuable for businesses and individuals who rely on AI for content creation.

3. Improved Content Quality: The continuous learning loop of Undetectable AI’s models means that the quality of the AI-generated content keeps improving. This results in text that not only passes as human but also resonates better with its intended audience.

4. Ethical AI Use: Undetectable AI emphasizes transparency, fairness, and accountability in its design and use. This ethical stance ensures that the technology is used responsibly, aligning with broader societal values and norms.

Real-World Applications

Election Integrity: During critical election periods, Undetectable AI’s detection systems have been pivotal in filtering out deceptive AI-generated misinformation, showcasing its potential to protect information integrity on digital platforms.

Academic Integrity: While some AI detection tools in academic settings have struggled with false positives, Undetectable AI continuously refines its algorithms to ensure fair and accurate detection, thus supporting the integrity of educational assessments.

Undetectable AI stands out as one of the more credible options offering innovative tools that ensure AI-generated text is both high-quality and indistinguishable from human writing. By embracing these technologies, we can enhance the synergy between AI and human creativity, paving the way for a future where digital content remains authentic and trustworthy.

Ethical Considerations in AI Content Detection

The world of AI content detection is full of ethical challenges that need careful attention to keep the technology trustworthy. Here are the key areas I would recommend focusing on:

Privacy Concerns

First off, user privacy is a huge deal. To detect AI-generated content, we need to analyze a lot of text. But where does this text come from? Are we getting consent from the people whose content we’re analyzing? Ensuring privacy measures are strong and clear is crucial for building trust in these technologies.

Potential for Bias

Next, there’s the issue of bias. AI detection systems are only as fair as the data they’re trained on. If the training data has biases, these can be baked into the algorithms, leading to unfair outcomes. For example, content from minority groups might be flagged as AI-generated more often due to these biases. To combat this, we need to carefully select and continually review our datasets and refine our algorithms.

Accountability

Accountability is another major concern. As AI detection systems become more critical in telling human content apart from AI-generated content, we need to be sure about their accuracy and reliability. Clear lines of accountability for the decisions made by these systems are essential. This means being honest about their limitations and having ways to address mistakes, like false positives or negatives.
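Being honest about limitations starts with measuring them. The sketch below shows how accuracy, the false positive rate, and the false negative rate fall out of four simple counts. The class name `DetectorCounts` and the numbers in the example are made up for illustration and do not describe any real detector.

```python
from dataclasses import dataclass


@dataclass
class DetectorCounts:
    """Outcome counts from checking a detector against labeled samples."""

    true_positive: int   # AI text correctly flagged as AI
    false_positive: int  # human text wrongly flagged as AI
    true_negative: int   # human text correctly passed as human
    false_negative: int  # AI text wrongly passed as human

    def accuracy(self) -> float:
        total = (
            self.true_positive + self.false_positive
            + self.true_negative + self.false_negative
        )
        return (self.true_positive + self.true_negative) / total if total else 0.0

    def false_positive_rate(self) -> float:
        # Share of human-written samples that were wrongly flagged.
        humans = self.false_positive + self.true_negative
        return self.false_positive / humans if humans else 0.0

    def false_negative_rate(self) -> float:
        # Share of AI-generated samples that slipped through.
        ai = self.true_positive + self.false_negative
        return self.false_negative / ai if ai else 0.0


# Hypothetical evaluation on 100 human-written and 100 AI-generated samples.
counts = DetectorCounts(
    true_positive=80, false_positive=10, true_negative=90, false_negative=20
)
```

With these invented counts the detector is right 85% of the time, yet it still wrongly flags one in ten human writers. That is why a single accuracy figure is not enough: the false positive rate is the number that matters most to the person being accused.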

Intellectual Property

Finally, we must respect intellectual property. AI systems are getting better at creating content, but we need to make sure they aren’t stepping on the creative rights of individuals. Balancing innovation with respect for intellectual property is tricky but necessary.

Multidisciplinary Approach

Handling these ethical challenges requires input from various fields: technology, law, ethics, and sociology. Only by working together can we ensure that AI content detection is ethical, responsible, and in line with societal values.

Stay informed and engage with these ethical considerations. Support transparency, push for fairness, and help promote responsible use of AI. By doing so, we can harness the power of AI while upholding the integrity and trustworthiness of digital content.

Humanizing AI: The Path to Coexistence

The integration of AI into our lives needs a thoughtful approach to ensure it enhances rather than replaces the human experience. Whether we like it or not, AI is here to stay. It’s not a binary choice between “Yes, we need it” and “No, we don’t”. It’s all about coexistence. Here’s how we can achieve that:

Human-Centric Design

First, we need to focus on human-centric design principles. These prioritize people’s needs, perspectives, and well-being in the development of AI technologies. It’s about creating AI that is not just efficient but also compassionate and inclusive.

Educating the Public

A big part of humanizing AI is helping people understand it better. Educational initiatives that demystify AI make its workings more accessible and relatable. By boosting AI literacy, we empower people to engage with AI confidently and critically, closing the gap between human intuition and artificial intelligence.

Transparency and Accountability

Transparency and accountability are crucial. People need to trust that AI operates ethically and respects human dignity and autonomy. Clear accountability mechanisms are essential to address any negative impacts or ethical issues that arise, ensuring AI developers and users are responsible for their creations.

Collaboration Across Fields

Collaboration between diverse stakeholders—tech experts, ethicists, sociologists, psychologists—is vital. These multidisciplinary efforts bring varied perspectives that help shape more empathetic and fair AI systems.

A Dynamic Process

Humanizing AI is an ongoing journey. It requires constant attention to how AI interacts with human life. The goal is to design AI that complements and enhances human abilities and experiences, ensuring AI acts as an ally, not a replacement.

It’s important to commit to these principles as we advance AI technologies. By focusing on human-centric design, boosting AI literacy, maintaining transparency and accountability, and fostering collaboration, we can ensure AI serves to enrich our lives and maintain our humanity.

Case Studies: Successes and Setbacks in AI Detection

Exploring how AI detection technologies work in the real world gives us a clear view of both their successes and the bumps in the road ahead. Let’s look at some real-life examples to see how innovation and the need for accuracy play out in this field.

Election Integrity

One success story comes from a top tech company that created an advanced AI detection system. This system, using deep learning and natural language processing, could spot AI-generated text with impressive accuracy. During a crucial election period, it was key in flagging and filtering out misleading AI-generated misinformation. This achievement showed how AI detection can protect the integrity of information on digital platforms.

Academic Integrity Challenges

On the flip side, there’s a case involving an AI detection tool meant for academic settings. It aimed to differentiate between student-written assignments and those created by AI. However, it hit a snag with false positives, mistakenly flagging genuine student work as AI-generated. This mistake caused unnecessary stress for students and highlighted the complexity of AI content detection. It underscored the need for ongoing improvements to make these systems more reliable and fairer.

Social Media Moderation

Another example is a social media platform that tried to use an AI content detector to automatically moderate posts. While it was good at catching obvious AI-generated content, it struggled with more sophisticated cases where AI text was heavily edited by humans. This challenge showed the current limits of detection systems when dealing with mixed-content scenarios and pointed to the need for more refined detection capabilities.

Key Takeaways

These case studies highlight the dual nature of AI detection technology. On one hand, it has great potential to improve digital ecosystems by keeping information accurate and trustworthy. On the other hand, there are still significant hurdles to overcome to make these systems as effective and fair as possible.

As AI technologies evolve, these real-world examples provide valuable lessons for researchers and developers. They remind us of the importance of continuous refinement and the need to balance innovation with ethical considerations. By learning from both successes and setbacks, we can move closer to creating AI detection solutions that truly enhance our digital lives.

Preparing for the Future: Strategies and Recommendations

As we enter a new era of content creation dominated by undetectable AI, it’s clear we need a thoughtful and strategic approach to navigate this complex landscape. Here’s how we can chart a forward-looking course:

Invest in AI Detection Research

First, we need to pour resources into ongoing AI detection research. This isn’t just about creating advanced machine learning models and NLP tools, but also exploring new technologies that offer fresh ways to detect AI-generated content. As AI techniques evolve, our detection methods must be just as dynamic and innovative.

Foster Collaboration

Second, collaboration is key. We need strong partnerships between academia, industry, and regulatory bodies. By sharing knowledge, resources, and best practices, we can accelerate progress in detection capabilities. These collaborations also ensure that ethical considerations are built into AI systems from the start, tackling both technical challenges and ethical dilemmas.

Promote Transparency and Accountability

Transparency and accountability are crucial. Developing standards and frameworks that require the disclosure of AI use in content creation helps maintain the integrity of digital spaces. Implementing labeling systems for AI-generated content can give consumers the information they need to critically evaluate what they read and see.

Develop Ethical Guidelines and Regulatory Oversight

Ethical guidelines and regulatory oversight are essential. These measures should focus on protecting user privacy, preventing discrimination, and ensuring the responsible use of AI in content creation. Setting these foundations builds trust and safety in our digital ecosystem.

Embrace a Multifaceted Strategy

Moving forward, embracing these strategies will be key. We need to prepare for a future where undetectable AI and human creativity coexist. By investing in research, fostering collaboration, promoting transparency, and developing ethical guidelines, we can ensure the responsible evolution of content creation in the digital age.

It’s time to act. Support these initiatives, engage with stakeholders, and push for transparency and ethical practices in AI. Together, we can navigate this new landscape and make sure AI serves to enhance, not undermine, the integrity of our digital world.

The Importance of Transparency in AI Usage

In the world of artificial intelligence, transparency is more than just a good practice; it’s the foundation for ethical AI use. Embracing transparency builds trust and integrity, essential for society to accept and integrate AI technologies. Developers and companies must clearly explain how AI is used, how decisions are made, and the influence of AI on content creation and distribution.

Clear and Accessible Explanations

Transparency means more than just saying AI is involved; it requires making AI processes understandable to everyone, not just specialists. This involves breaking down complex AI algorithms into plain language, so users can see how AI decisions are made. When users understand these processes, they can better judge the origin and reliability of AI-generated content.

Ongoing Dialogue

Transparent AI use also involves continuous conversation between developers, users, and other stakeholders. This dialogue should cover not only current uses of AI but also how future applications might affect society. Engaging with various perspectives helps spot and address potential biases and inequities in AI systems.

Ethical Implications

Discussing the ethical implications of AI in content creation is crucial. Companies need to be open about how they ensure AI-generated content aligns with societal norms and values and how they prevent the spread of misinformation. This openness helps maintain a high standard for digital content.

Commitment to Transparency

Prioritizing transparency in AI use is key as AI continues to shape our digital world. By focusing on clear communication, ongoing dialogue, and ethical practices, we can ensure AI enhances human creativity and integrity. This approach keeps the digital ecosystem a place of authentic and trustworthy information.

In summary, support initiatives that promote clear, accessible explanations of AI processes. Engage in dialogues about the ethical use of AI and advocate for standards that ensure AI-generated content is reliable and trustworthy. By doing so, we can build a future where AI and human creativity work hand in hand to create a better digital world.

The Evolution of AI Content Detection: What Lies Ahead

The future of AI content detection is filled with immense potential and significant challenges. As AI gets better at creating content, the race to detect it becomes more intense. The next steps involve developing more refined and sophisticated detection methods that use advanced machine learning and natural language understanding to spot the subtle differences between AI-generated and human-written text.

Real-Time Adaptation

To stay ahead, detection methods must adapt in real-time to the ever-changing tactics of AI content creators. This requires cutting-edge research in deep learning and enhanced computational linguistics. We also need to dive deeper into the context and meaning of text through semantic analysis, moving beyond the surface-level checks that current methods rely on.

Collaborative Efforts

The way forward involves a team effort. Technologists, ethicists, and policymakers need to work together to create detection frameworks that are not only effective but also ethical and socially responsible. This multidisciplinary collaboration ensures that the evolution of AI content detection supports digital trust and authenticity.

Ethical and Social Responsibility

Building detection methods isn’t just a technical challenge; it’s about aligning with broader goals of digital integrity. As AI-generated content becomes more common, ensuring that our detection methods are transparent, fair, and ethical is crucial. This approach helps maintain a balanced coexistence of AI-generated and human-created content in our digital world.

To achieve these goals, we must invest in advanced research and foster collaborations across different fields. Support initiatives that push the boundaries of AI content detection while keeping ethical considerations at the forefront. By doing so, we can create a future where AI and human creativity coexist harmoniously, enhancing the quality and trustworthiness of digital content.

If you liked this article, please share it and subscribe to my website. For consulting work, please visit my website, Shift Gear; I would be glad to help with your requirements.
